If you are a data leader, how many times have you heard business teams say that AI gives generic answers that are not specific to their organisational context? Or that something that looks like a fancy PoC, a thin wrapper around an LLM, never scales to real adoption?

This happens because most systems cannot hold your business logic. They read your numbers but not the meaning behind them. They see your metrics but not the relationships that shape them. They can summarise a note or scan a sheet, but they cannot think with your data. They cannot act like an analyst who knows your world.

That missing understanding is what business ontology provides. Ontology is the structure that defines what your metrics mean and how they relate. It is how a context-aware AI system keeps track of your definitions, your rules, and the cause-and-effect links that shape decisions. Without that structure, every answer becomes a guess.

This is where AI decision making breaks down without context.

This pattern is similar to what we see with LLM-led analytics.

So what is this delta?

It is the gap between what a number shows and what the business thinks it means. You see it when a metric moves, and three teams give you three explanations. You see it when a good quarter for one group creates headaches for another. You see it when you realize there is no single place where your company’s logic actually lives.

This is where enterprise reasoning becomes essential.

Why the usual models fall short

ChatGPT, Claude, and other general-purpose models handle quick tasks well. They can clean up a note or scan a small sheet, but they are not built to understand how your business works. They treat your metrics as isolated labels, forget your rules between sessions, and return different logic each time you ask the same question. That is the gap Green closes.

These models also behave as if every definition is equally valid. If Marketing uses one definition of a sales qualified lead (SQL) and Sales uses another, they treat both as correct. Nothing alerts you. So the answers sound polished but lead you nowhere.

This is why you get neat paragraphs instead of a real explanation. This is not data reasoning. It is surface text.

What ontology actually is

Ontology is the structure behind your numbers. It defines what each metric means and how one metric influences another. It captures how decisions in one part of the business echo in another. It turns scattered interpretation into a system.

For example, the pipeline affects bookings. Bookings influence revenue. Revenue reacts to pricing, churn, and mix. Retention depends on experience and timing. Margins shift with discount patterns. Acquisition cost changes with channel behavior. These rules already exist. They just are not captured anywhere.
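To make the idea concrete, here is a minimal sketch of those rules written down as a small dependency graph. It is an illustration only, not how Green stores its ontology: the metric names, the `INFLUENCES` mapping, and the `upstream_drivers` helper are all assumptions made for this example.

```python
from collections import deque

# Hypothetical edges: each metric maps to the metrics it directly influences.
# The relationships mirror the examples in the text; the names are illustrative.
INFLUENCES = {
    "pipeline": ["bookings"],
    "bookings": ["revenue"],
    "pricing": ["revenue"],
    "churn": ["revenue"],
    "mix": ["revenue"],
    "experience": ["retention"],
    "timing": ["retention"],
    "discount_patterns": ["margin"],
    "channel_behavior": ["acquisition_cost"],
}


def upstream_drivers(metric, influences=INFLUENCES):
    """Return every metric that directly or indirectly influences `metric`."""
    # Invert the graph so we can walk from an effect back to its causes.
    parents = {}
    for cause, effects in influences.items():
        for effect in effects:
            parents.setdefault(effect, []).append(cause)

    # Breadth-first walk from the metric back through all of its causes.
    seen, queue = set(), deque([metric])
    while queue:
        current = queue.popleft()
        for cause in parents.get(current, []):
            if cause not in seen:
                seen.add(cause)
                queue.append(cause)
    return sorted(seen)


print(upstream_drivers("revenue"))
# ['bookings', 'churn', 'mix', 'pipeline', 'pricing']
```

Once the links are written down, a question like "what could have moved revenue?" becomes a lookup instead of a debate. Note that pipeline shows up even though it only reaches revenue through bookings.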

A dashboard shows you the result. Ontology explains what created it.

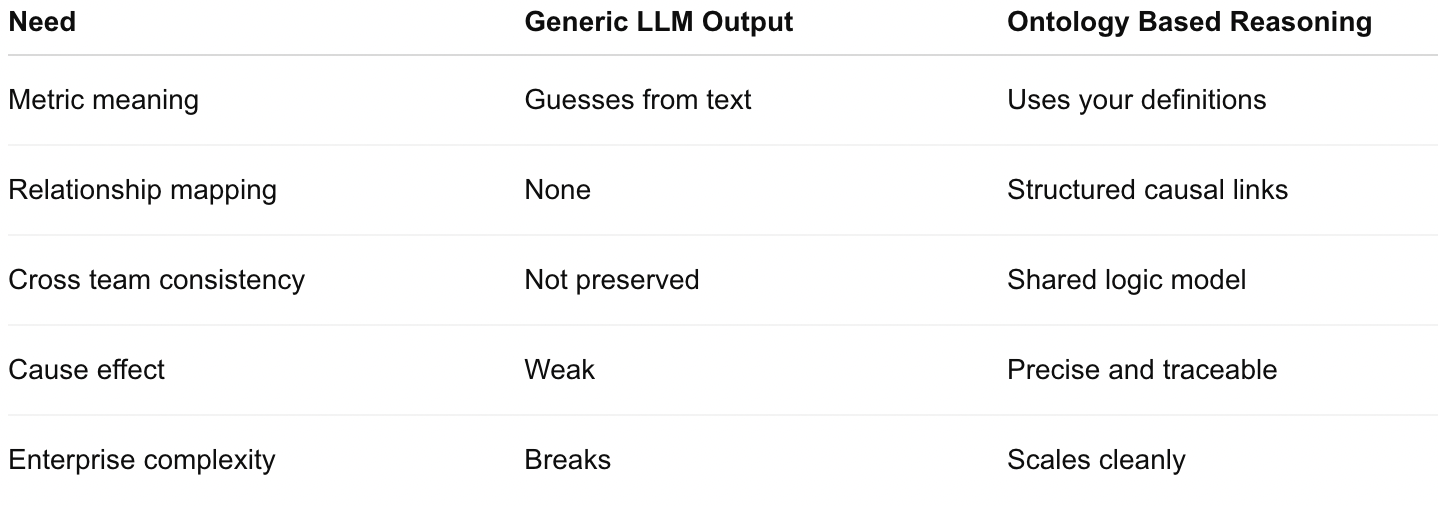

A quick comparison

Here is a view of what ontology provides compared to generic LLM answers.

Where does ontology live today, and how does Green build it?

Your rules sit in habits, meetings, and old threads. Your definitions live in slides that nobody updates. Your understanding of cause and effect lives in people’s heads. No tool captures that. No tool holds it. And no tool uses it to produce consistent answers.

Green fixes that with ontology, through a three-step process.

- It reads across information silos such as CRM, ERP, and shared drives to create a knowledge graph and a causal understanding of objects, entities, relationships, lineage, and governance.

- It ingests process docs, workflows, OKRs, KPIs, and multimodal inputs to enrich that graph further.

- Our FDE sits with key business stakeholders and maps their logic using our proprietary AI Ontology Builder.

Every step has trace logs, checks, and approvals, so the structure stays clean and trusted. From here, Green learns your business the way an operator learns it. It reads your tables and sees how CRM fields connect to bookings and how bookings connect to revenue. It notices the patterns your team works with every day. It watches how data shifts over time.

If a slow pipeline in June turns into a revenue dip in September, Green records that link. When you explain why something happened, Green keeps that context and ties it to the right data. Your routines shape the model as well. How often you review numbers, whether you look at segments or regions, and which metrics get attention first all guide how Green reads your world. The model gets sharper with each correction, explanation, and decision.
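One simple way to picture a June-to-September link is a lagged correlation: shift one series against the other and see which shift lines up best. The sketch below assumes that approach for illustration; it is not a description of Green's method. The monthly figures are made up, and `pearson` and `best_lag` are hypothetical helper names.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def best_lag(driver, outcome, max_lag):
    """Find the lag (in periods) at which `driver` best tracks `outcome`."""
    scores = {}
    for lag in range(max_lag + 1):
        # Pair driver[t] with outcome[t + lag].
        xs = driver[: len(driver) - lag]
        ys = outcome[lag:]
        scores[lag] = pearson(xs, ys)
    return max(scores, key=scores.get), scores


# Made-up monthly data: revenue follows pipeline three months later.
pipeline = [100, 120, 90, 60, 110, 130, 95, 70, 115, 125, 100, 80]
revenue = [50, 55, 52, 50, 60, 45, 30, 55, 65, 47.5, 35, 57.5]

lag, scores = best_lag(pipeline, revenue, max_lag=4)
print(lag)  # 3
```

A strong lagged correlation is only a candidate link. It still needs a person to confirm that the relationship makes sense before it becomes a rule.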

This is how a context-aware AI system becomes accurate over time.

When you ask a real question

Consider a question leaders ask often:

“Why did Q3 revenue fall when marketing spend increased?”

A generic model will give you a tidy paragraph with guesses. Green will not.

It reviews your sales, marketing, and customer data.

It checks how spending changed through the quarter.

It studies conversion patterns and pipeline signals.

It connects these movements to the rules it has already learned from you.

If something is missing or two teams define a metric differently, it asks instead of filling the gaps on its own.

The answer reflects your world, not a template. It makes sense in the context of your business, not anyone else’s.

This is the difference between chat output and a reasoning engine.
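The "ask instead of guess" behavior can be sketched as a check that runs before any answer is produced. This is a minimal illustration under assumed inputs, not Green's actual logic: the function name `clarifying_questions`, its parameters, and the sample definitions are all hypothetical.

```python
def clarifying_questions(required_metrics, available_data, definitions):
    """List what must be asked before the question can be answered.

    required_metrics: metric names the question depends on
    available_data:   set of metric names that have data loaded
    definitions:      metric name -> {team: definition text}
    """
    questions = []
    for metric in required_metrics:
        # Missing data: ask for it rather than estimating.
        if metric not in available_data:
            questions.append(f"No data found for '{metric}'. Where does it live?")
            continue
        # Conflicting definitions: ask which one applies.
        variants = set(definitions.get(metric, {}).values())
        if len(variants) > 1:
            teams = ", ".join(sorted(definitions[metric]))
            questions.append(
                f"'{metric}' is defined differently by {teams}. Which version applies?"
            )
    return questions


# Illustrative inputs for "Why did Q3 revenue fall when marketing spend increased?"
definitions = {"sql": {"Marketing": "requested a demo", "Sales": "accepted by a rep"}}
questions = clarifying_questions(
    required_metrics=["marketing_spend", "sql", "revenue"],
    available_data={"marketing_spend", "sql"},
    definitions=definitions,
)
for question in questions:
    print(question)
```

An empty list means nothing blocks the analysis. Anything else goes back to the person who asked, before an answer is written.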

Memory that actually reduces friction

Green does not keep everything you say. It keeps the parts that matter. When you confirm a definition, Green stores it. When you correct an assumption, Green updates the structure. When you explain the reason behind a shift, Green links it to the data.

If two departments use the same term differently, Green flags it. You choose the correct version. From that moment on, every analysis follows that rule. That one behavior removes a huge amount of repeated work.
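Here is a small sketch of that flag-then-confirm loop. It assumes a simple in-memory registry; the `DefinitionRegistry` class and its method names are hypothetical and only illustrate the behavior described above, not Green's internals.

```python
class DefinitionRegistry:
    """Keeps one confirmed definition per term and flags disagreements."""

    def __init__(self):
        self.proposed = {}   # term -> {team: definition}
        self.confirmed = {}  # term -> definition chosen by a person

    def propose(self, team, term, definition):
        """Record a team's definition; return True if teams now disagree."""
        by_team = self.proposed.setdefault(term, {})
        by_team[team] = definition
        return len(set(by_team.values())) > 1

    def confirm(self, term, definition):
        """Store the version a person chose; later lookups use only this."""
        self.confirmed[term] = definition

    def lookup(self, term):
        """Return the agreed definition, or raise if the term is unresolved."""
        if term in self.confirmed:
            return self.confirmed[term]
        variants = set(self.proposed.get(term, {}).values())
        if len(variants) == 1:
            return variants.pop()
        raise LookupError(f"'{term}' has no single agreed definition yet")


registry = DefinitionRegistry()
registry.propose("Marketing", "SQL", "lead that requested a demo")
conflict = registry.propose("Sales", "SQL", "lead accepted by a sales rep")
print(conflict)  # True

registry.confirm("SQL", "lead accepted by a sales rep")
print(registry.lookup("SQL"))  # lead accepted by a sales rep
```

The design choice worth noting is that `lookup` refuses to pick a side on its own. Until someone confirms a version, the conflict stays visible instead of being silently resolved.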

Teams stop re-explaining basics. Reviews become shorter. The discussion shifts from “what does this mean” to “what do we do now.”

What Green will and will not do

Green will build a shared logic model for your company. It will trace every answer to your data and the rules it learned from you. It will ask when something is unclear or missing. It will get better with repeated use.

Green will not invent explanations. It will not ignore conflicting definitions, treat loose patterns as facts, or pretend to know your business when it does not. It is designed to stay grounded. You can question the answer, see how it was reached, and decide whether to adjust the logic.

Why ontology matters now

Companies do not fail because they lack data. They fail because every team interprets the data differently. The logic behind the numbers is scattered and inconsistent. As teams grow, the problem gets worse. More roles, more tools, more interpretation layers, more debate.

Ontology solves this by giving the company one structure that everyone uses. Once that structure exists, the benefits show up quickly. Answers arrive faster. Definitions stop drifting. New hires ramp faster. Meetings shrink. Decisions move. Leaders get explanations they can act on.

Ontology is not theoretical. It is practical. It is the missing layer that turns data into shared understanding.

How is ontology different from a dashboard or BI layer?

A dashboard shows numbers. Ontology explains how those numbers depend on each other and why they shift.

How does Green learn my business?

Green learns from your data structure, your time-based patterns, your conversations, and your workflows. Together they form a logic model.

Does Green store memory across sessions?

Yes. Green saves your rules, definitions, and relationships. It does not restart from scratch.

What happens when two teams use different definitions?

Green catches the conflict and asks which version is correct. Once you confirm, the model updates.

Can Green detect time-based patterns?

Yes. When data contains dates, Green identifies how one period influences another.

How does Green handle missing context?

It does not try to fill gaps. It asks for what it needs.

Can Green help multiple teams at once?

Yes. All teams use the same logic model. Answers stay consistent.

Can Green read unstructured data like slides?

Yes. Slides, notes, and reports help Green understand how your business reasons.

Is Green transparent in how it reasons?

Yes. Every answer comes with a clear reasoning trail.

What if my business changes?

You update your data or explain the change. Green updates the logic.

Shaoli Paul, Product Marketing Manager, DecisionX

Shaoli Paul is a content and product marketing specialist with 4.5+ years of experience in B2B AI SaaS and fintech, working at the intersection of SEO, product messaging, and demand generation. She currently serves as Product Marketing Manager at DecisionX, leading the content and SEO strategy for its decision intelligence platform. Previously, she built global content strategies at Simetrik, Chargebee, and HighRadius, driving strong growth in organic visibility and lead conversion. Shaoli’s work focuses on making complex technology understandable, actionable, and human.
